Inference.ai: Revolutionizing GPU Cloud Services

Inference.ai stands out among GPU cloud providers for its wide range of NVIDIA GPU SKUs, which gives users access to the latest and most powerful hardware for their AI projects. This is a significant advantage, because state-of-the-art GPUs can greatly improve the performance and efficiency of AI models.

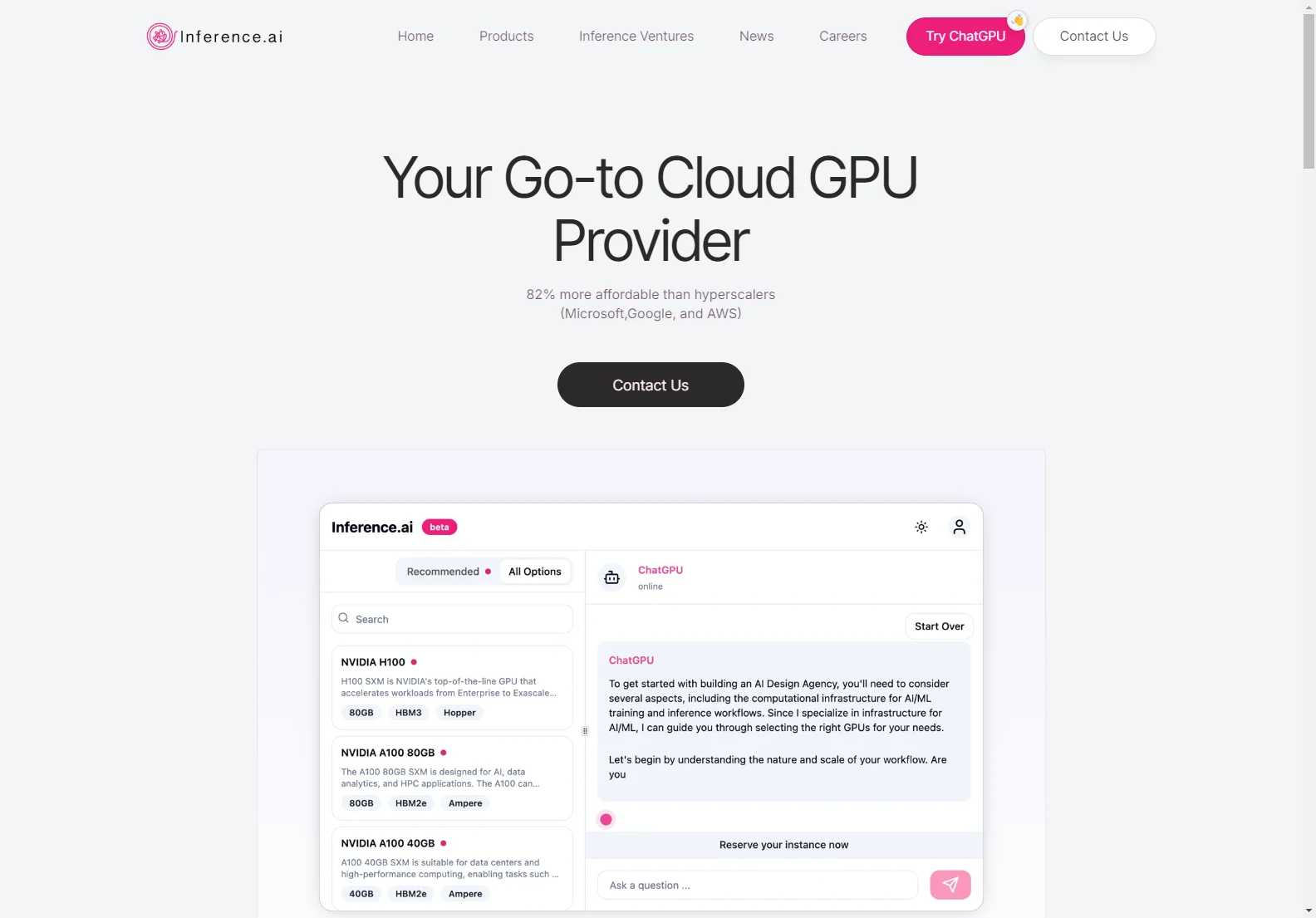

Cost-effectiveness is one of Inference.ai's key features. Its pricing is 82% lower than that of hyperscalers such as Microsoft, Google, and AWS, which makes it an attractive option for businesses and individuals who want to optimize their computing resources without overspending.
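To make the 82% figure concrete, here is a minimal sketch of the arithmetic. The baseline hourly rate and the number of GPU-hours are hypothetical values chosen only for illustration; they are not published prices from Inference.ai or any hyperscaler.

```python
def discounted_cost(baseline_hourly_rate, hours, discount=0.82):
    """Total cost of a job priced `discount` (as a fraction) below the baseline rate."""
    return baseline_hourly_rate * (1.0 - discount) * hours


# Hypothetical inputs, for illustration only (not real price quotes).
baseline_rate = 4.00  # assumed hyperscaler price in dollars per GPU-hour
hours = 100           # assumed length of a training job in GPU-hours

baseline_total = baseline_rate * hours
discounted_total = discounted_cost(baseline_rate, hours)

print(f"Baseline:   ${baseline_total:.2f}")    # Baseline:   $400.00
print(f"Discounted: ${discounted_total:.2f}")  # Discounted: $72.00
```

Under these assumed numbers, a job that would cost $400 at the baseline rate would cost $72 at an 82% lower rate.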

Inference.ai's data centers are also distributed globally. This helps provide low-latency access to computing resources from many regions, which is crucial for applications that require real-time processing or collaboration across geographic locations.

Beyond hardware and cost, Inference.ai offers several benefits for model development. Faster training allows rapid experimentation and iteration: users can quickly test different model architectures, hyperparameters, and training techniques to find the best configuration for their AI models. The GPU cloud services also scale, so users can easily adjust resources to match the size and complexity of their datasets or models.
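The kind of experimentation described above can be sketched as a small grid search over configurations. This is an illustrative sketch only: the search space values are arbitrary, and `train_and_score` is a hypothetical placeholder that returns a toy score so the example runs without a GPU or dataset. It is not an Inference.ai API.

```python
from itertools import product

# Hypothetical search space; the values are arbitrary examples.
search_space = {
    "learning_rate": [1e-3, 1e-4],
    "batch_size": [32, 64],
    "num_layers": [2, 4],
}


def train_and_score(config):
    """Placeholder for a real training run; returns a toy score (higher is better)."""
    return -abs(config["learning_rate"] - 1e-4) - abs(config["num_layers"] - 4) / 100


# Enumerate every combination of hyperparameter values.
configs = [
    dict(zip(search_space, values))
    for values in product(*search_space.values())
]

# Score each configuration and keep the best one.
best = max(configs, key=train_and_score)
print(f"Tried {len(configs)} configurations; best: {best}")
```

In practice each call to the scoring function would be a full training run, which is where faster GPUs and the ability to run several configurations in parallel shorten the iteration loop.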

By using Inference.ai's GPU cloud, users can focus on model development, experimentation, and optimization without the hassle of managing and maintaining the underlying infrastructure. This allows data scientists and developers to devote more time and energy to creating innovative AI solutions.

Overall, Inference.ai is a game-changer in the world of GPU cloud services, offering a combination of advanced hardware, cost savings, global accessibility, and streamlined model development capabilities.