LM Studio: Empowering Local LLM Usage

LM Studio is a desktop application for running large language models (LLMs) locally. Its main features are offline inference, a built-in chat interface, an OpenAI-compatible local server, and model downloads from Hugging Face.

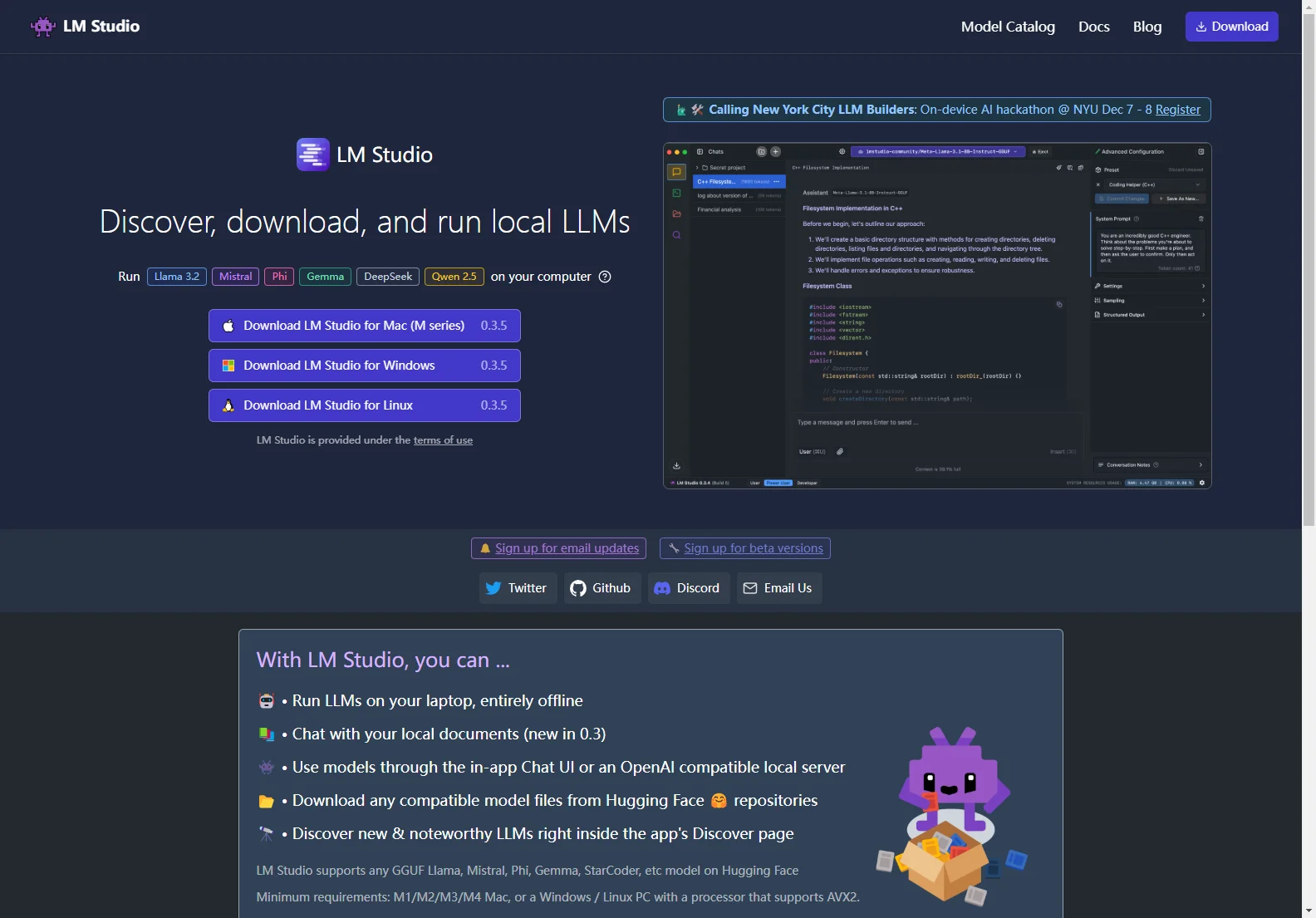

LM Studio lets users run LLMs on their laptops entirely offline. Because inference runs on the local machine, it does not depend on an internet connection, which makes it a good fit for users who value data security.

LM Studio has three core features. Users can chat with their local documents, which is new in version 0.3. They can use models through the in-app Chat UI or through an OpenAI-compatible local server. They can also download any compatible model files from Hugging Face repositories, which makes it easy to try different models.
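As an illustration of what "OpenAI-compatible" means in practice, the sketch below builds and sends a chat completion request using only the Python standard library. It rests on assumptions not stated above: that the local server has been started in LM Studio, that it listens at `http://localhost:1234/v1` (check the address shown in the app), and that `local-model` is a placeholder for the identifier of whichever model is loaded. The helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

# Assumption: address of the LM Studio local server; adjust to match the app.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # placeholder; use the identifier of the loaded model
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(chat("Say hello in one sentence."))` should print the model's reply. Because the request shape follows the OpenAI chat completions format, existing OpenAI client code can usually be pointed at the local server by changing only its base URL.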

For basic usage, LM Studio supports a wide range of models on Hugging Face, including Llama, Mistral, Phi, Gemma, StarCoder, and more. The minimum requirements are an M1/M2/M3/M4 Mac, or a Windows / Linux PC with a processor that supports AVX2.
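To check a machine against these requirements before installing, something like the following best-effort sketch may help. It is not LM Studio's own check: the function names are hypothetical, the AVX2 test only works on Linux (by reading `/proc/cpuinfo`), and Windows is left uncovered.

```python
import platform
from pathlib import Path


def cpuinfo_has_avx2(cpuinfo_text):
    """Return True if a 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


def meets_minimum_cpu():
    """Best-effort check; returns None when this sketch cannot tell."""
    system = platform.system()
    if system == "Darwin":
        # Apple Silicon reports arm64. A Python build running under Rosetta
        # reports x86_64, so a False result here is not conclusive.
        return platform.machine().lower() == "arm64"
    cpuinfo = Path("/proc/cpuinfo")
    if system == "Linux" and cpuinfo.exists():
        return cpuinfo_has_avx2(cpuinfo.read_text())
    return None  # e.g. Windows: not covered by this sketch
```

Calling `meets_minimum_cpu()` returns `True` or `False` where the sketch can decide, and `None` otherwise, so a caller can distinguish "unsupported" from "unknown".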

LM Studio is also designed with privacy in mind: users' data remains private and local to their machines.

Overall, LM Studio offers a practical way to run LLMs locally, combining offline use, a chat interface, and a local server in one application.