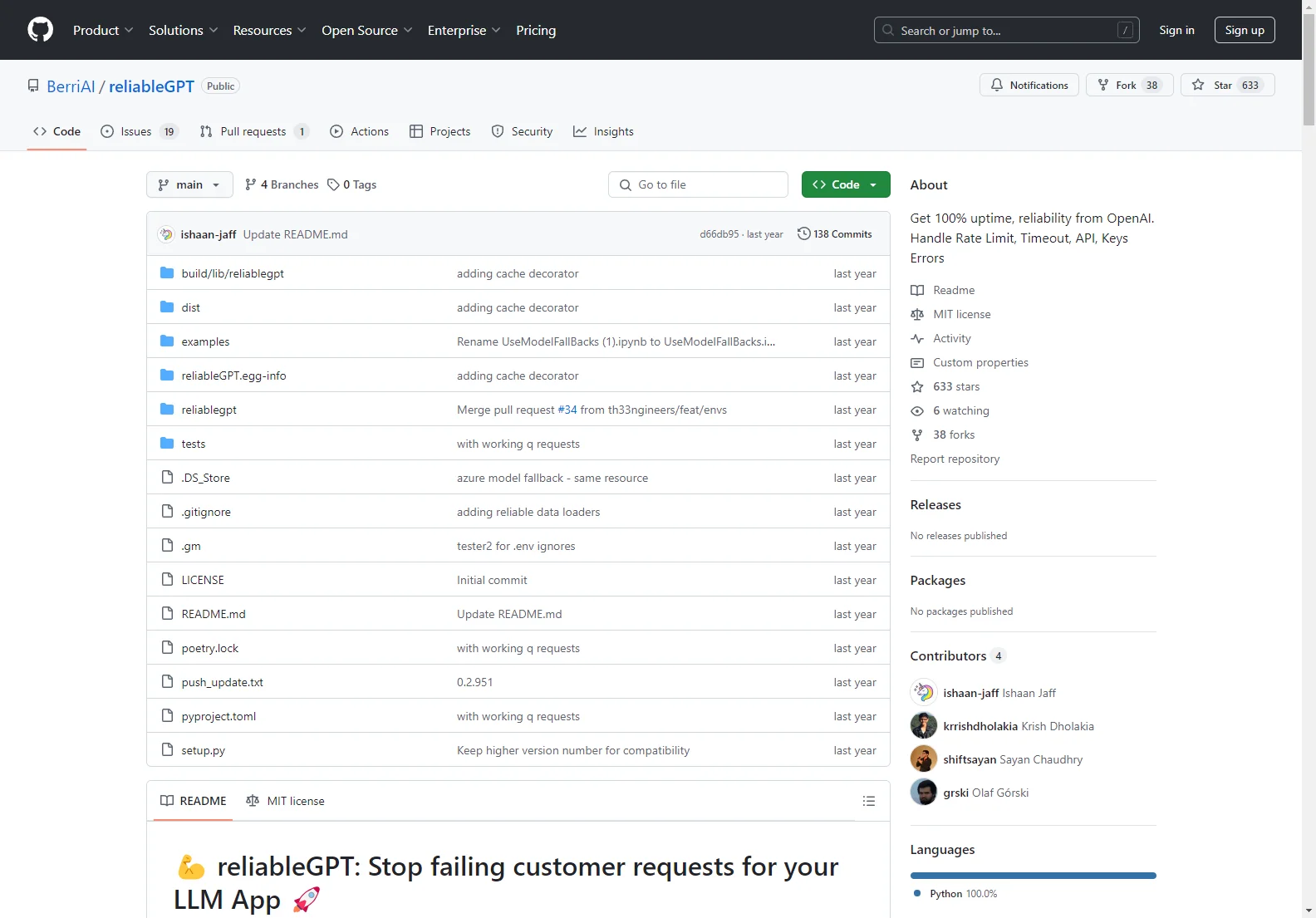

## BerriAI/reliableGPT: Enhancing OpenAI Uptime and Error Handling

BerriAI/reliableGPT is a tool that aims to give LLM applications built on OpenAI 100% uptime and reliability. It handles a variety of errors, including rate limit, timeout, API, and invalid API key errors.

Core Features:

- Retry Mechanism: When a request from an LLM app fails, reliableGPT retries it with alternate models such as GPT-4, GPT-3.5, GPT-3.5 16k, and text-davinci-003.

- Context Window Handling: When a request fails with a context window error, reliableGPT automatically retries it with a model that has a larger context window.

- Cached Response: Provides a hosted (not in-memory) response cache as a backup in case model fallbacks and retries fail. This helps with request timeouts and high task queue depth.

- Fallback Strategy: Allows users to specify a fallback strategy for handling failed requests. For example, users can define fallback_strategy=['gpt-3.5-turbo', 'gpt-4', 'gpt-3.5-turbo-16k', 'text-davinci-003'], and reliableGPT will retry with the specified models in the given order until it receives a valid response.

- Backup Tokens and Keys: Lets users pass backup API keys and tokens to handle invalid API key errors and key rotations.
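The features listed above describe behavior rather than code. As a rough illustration of how ordered model fallbacks, context-window escalation, and backup keys can fit together, here is a minimal pure-Python sketch. It is not reliableGPT's implementation: `call_with_fallbacks`, `fake_create`, the three exception classes, and the context sizes are hypothetical stand-ins, and no real OpenAI call is made.

```python
from typing import Callable, Dict, List, Optional


class RateLimitError(Exception):
    """Stand-in for a rate-limit, timeout, or generic API error (hypothetical)."""


class ContextWindowError(Exception):
    """Stand-in for a 'context length exceeded' error (hypothetical)."""


class InvalidKeyError(Exception):
    """Stand-in for an invalid or revoked API key error (hypothetical)."""


def call_with_fallbacks(
    create: Callable[..., dict],
    messages: List[dict],
    fallback_strategy: List[str],
    api_keys: List[str],
    context_sizes: Dict[str, int],
) -> dict:
    """Try each model in fallback_strategy order, rotating to backup keys as needed."""
    last_error: Optional[Exception] = None
    for key in api_keys:
        queue = list(fallback_strategy)
        while queue:
            model = queue.pop(0)
            try:
                return create(model=model, messages=messages, api_key=key)
            except InvalidKeyError as err:
                last_error = err
                break  # this key is unusable: move on to the next backup key
            except ContextWindowError as err:
                last_error = err
                # Keep only remaining models whose context window is strictly larger.
                limit = context_sizes.get(model, 0)
                queue = [m for m in queue if context_sizes.get(m, 0) > limit]
            except RateLimitError as err:
                last_error = err  # transient failure: try the next model in order
    raise RuntimeError("all models and keys failed") from last_error


def fake_create(model: str, messages: List[dict], api_key: str) -> dict:
    """Simulated provider call used only for this demo."""
    if api_key == "sk-expired":
        raise InvalidKeyError(api_key)
    if model == "gpt-3.5-turbo":
        raise ContextWindowError(model)
    if model == "gpt-4":
        raise RateLimitError(model)
    return {"model": model, "key": api_key}


# Illustrative context sizes, assumed for this sketch only.
SIZES = {"gpt-3.5-turbo": 4096, "gpt-4": 8192, "gpt-3.5-turbo-16k": 16384, "text-davinci-003": 4097}

result = call_with_fallbacks(
    fake_create,
    messages=[{"role": "user", "content": "Summarize this long document..."}],
    fallback_strategy=["gpt-3.5-turbo", "gpt-4", "gpt-3.5-turbo-16k", "text-davinci-003"],
    api_keys=["sk-expired", "sk-valid"],
    context_sizes=SIZES,
)
print(result)  # {'model': 'gpt-3.5-turbo-16k', 'key': 'sk-valid'}
```

Breaking out of the model loop on a key error abandons a revoked key immediately, while a context window error narrows the remaining queue to larger-window models instead of retrying ones that would fail the same way.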

Basic Usage:

- Installation: To get started, run `pip install reliableGPT`.

- Integration: The core package is designed to be easy to integrate, whether you call OpenAI, Azure OpenAI, Langchain, or LlamaIndex. For example, to wrap OpenAI's chat completion call:

```python
import openai
from reliablegpt import reliableGPT

# Replace the OpenAI call with a reliableGPT-wrapped version
openai.ChatCompletion.create = reliableGPT(openai.ChatCompletion.create, user_email='[email protected]')
```
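The integration line works by replacing `openai.ChatCompletion.create` with the callable that `reliableGPT(...)` returns, so calls made through `openai.ChatCompletion.create` after the assignment go through the wrapper. The sketch below shows that wrapping pattern combined with a cached-response fallback. It is an illustration under stated assumptions, not the library's code: `with_cached_backup` and `FakeChatCompletion` are hypothetical, and a plain in-process dict stands in for reliableGPT's hosted cache.

```python
import functools
from typing import Any, Callable, Dict


def with_cached_backup(create: Callable[..., Any], cache: Dict[str, Any]) -> Callable[..., Any]:
    """Hypothetical wrapper: remember successful responses and replay them on failure."""

    @functools.wraps(create)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        # Crude, illustrative cache key built from the call arguments.
        key = repr((args, sorted(kwargs.items())))
        try:
            response = create(*args, **kwargs)
        except Exception:
            if key in cache:
                return cache[key]  # serve the last good answer for this request
            raise  # nothing cached: surface the original error
        cache[key] = response
        return response

    return wrapper


class FakeChatCompletion:
    """Stand-in for openai.ChatCompletion, used only for this demo."""

    fail = False

    @staticmethod
    def create(**kwargs: Any) -> dict:
        if FakeChatCompletion.fail:
            raise TimeoutError("request timed out")
        return {"choices": [{"message": {"content": "Hello!"}}]}


# Same replace-the-method pattern as the reliableGPT integration line.
cache: Dict[str, Any] = {}
FakeChatCompletion.create = with_cached_backup(FakeChatCompletion.create, cache)

request = {"model": "gpt-3.5-turbo", "messages": [{"role": "user", "content": "hi"}]}
first = FakeChatCompletion.create(**request)
FakeChatCompletion.fail = True  # simulate an outage
second = FakeChatCompletion.create(**request)  # served from the cache
print(second == first)  # True
```

A request that was never answered successfully has nothing to fall back on, so the wrapper re-raises the original error rather than inventing a response.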

In conclusion, BerriAI/reliableGPT combines model fallbacks, context window handling, cached responses, and backup keys to keep LLM applications running and to reduce the impact of OpenAI errors, which makes it a useful tool for developers and businesses that rely on OpenAI.