All in one LLM Token Counter: Empowering Efficient Token Management

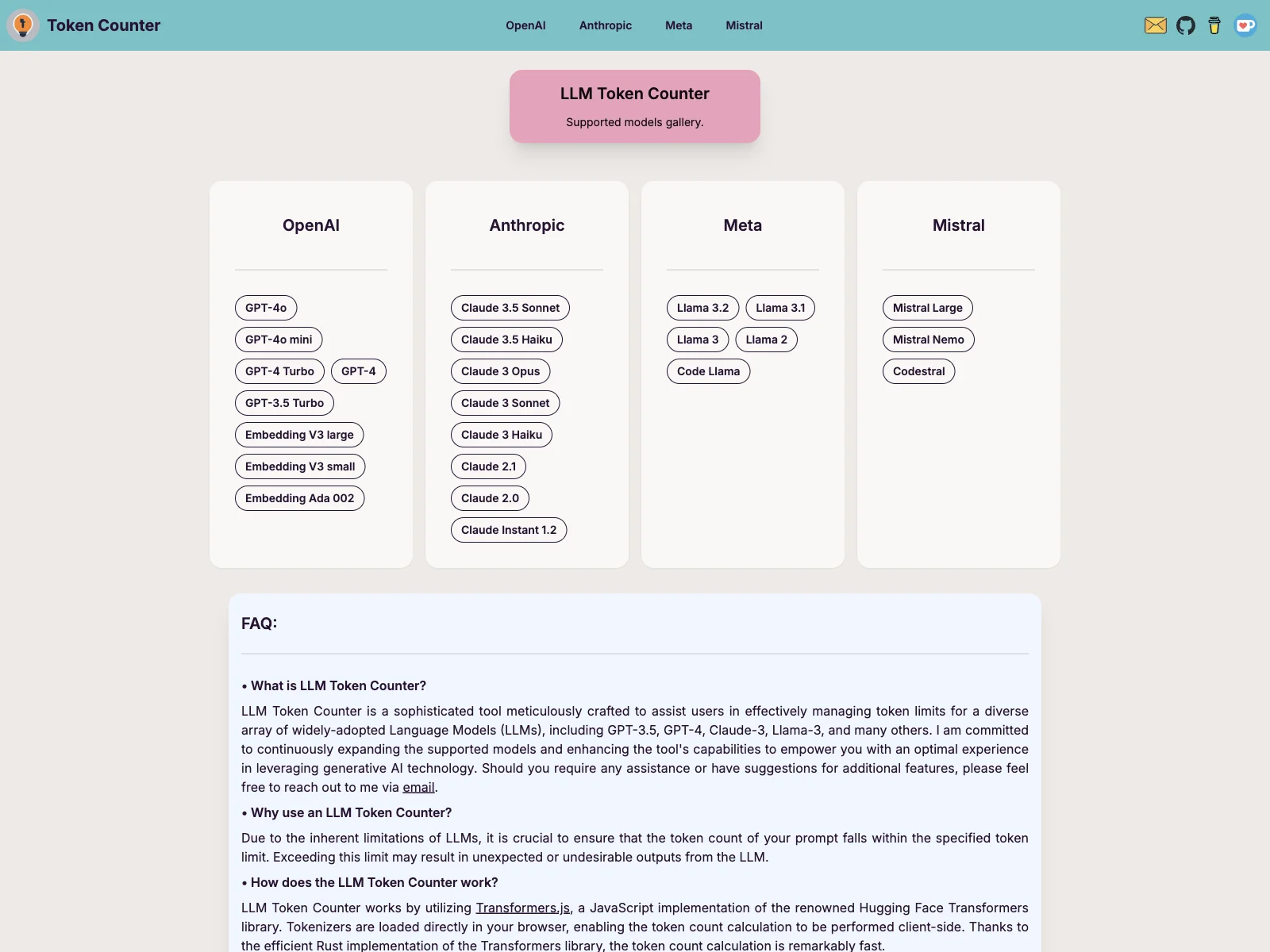

The All in one LLM Token Counter is a tool for keeping prompts within the token limits of a wide range of widely adopted large language models (LLMs).

The tool is aimed at anyone working with LLMs. Every model accepts only a limited number of tokens, and the tool helps ensure that the token count of a prompt stays within that limit. This matters because exceeding the limit can lead to unexpected and undesirable outputs.

The core feature of the LLM Token Counter is client-side tokenization. It uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library, to load tokenizers directly in the user's browser and calculate the token count there. Because the prompt never leaves the browser, its contents stay confidential, and the count is returned quickly since no server round trip is involved.
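As a rough illustration of what client-side counting amounts to, the sketch below treats a tokenizer as anything with an `encode` method that returns token IDs, and counts tokens by taking the length of that array. The `Encoder` interface, the `countTokens` helper, and the whitespace-based stand-in are hypothetical names introduced here so the example runs without downloading anything. The commented Transformers.js wiring at the end, including the package and model names, is an assumption and is not taken from the tool itself.

```typescript
// Minimal shape of a tokenizer: anything that maps text to token IDs.
// (Hypothetical interface for this sketch.)
interface Encoder {
  encode(text: string): number[];
}

// Counting tokens is just encoding the text and measuring the ID array.
function countTokens(encoder: Encoder, text: string): number {
  return encoder.encode(text).length;
}

// Hypothetical stand-in so the sketch runs offline: one ID per
// whitespace-separated word. Real tokenizers split text into subwords
// and will report different counts.
const whitespaceEncoder: Encoder = {
  encode(text: string): number[] {
    const words = text.trim().split(/\s+/).filter((w) => w.length > 0);
    return words.map((_, index) => index);
  },
};

console.log(countTokens(whitespaceEncoder, "How many tokens is this?")); // 5

// In a browser, a real tokenizer could be loaded roughly like this
// (package and model names are assumptions):
//   import { AutoTokenizer } from "@xenova/transformers";
//   const tokenizer = await AutoTokenizer.from_pretrained("Xenova/gpt-4");
//   countTokens(tokenizer, "How many tokens is this?");
```

Keeping the tokenizer behind a small interface means the counting logic stays the same whichever model's tokenizer is loaded.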

Basic usage is simple: users enter their text and the tool reports its token count. They can then shorten or restructure the prompt until it fits within the token limit of the chosen model.
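Once a token count is known, checking it against a model's limit is simple arithmetic. The sketch below is one way to express that check. The `checkTokenBudget` helper, the `BudgetCheck` type, and the numbers used are hypothetical, and the optional output reservation reflects the assumption that the model's limit covers both the prompt and the generated reply.

```typescript
// Result of comparing a prompt's token count against a model's limit.
// (Hypothetical type for this sketch.)
interface BudgetCheck {
  fits: boolean;      // true when the prompt is within the budget
  remaining: number;  // tokens left over; negative when over budget
}

// Compare a token count with a context limit, optionally holding back
// some tokens for the model's reply.
function checkTokenBudget(
  promptTokens: number,
  contextLimit: number,
  reservedForOutput: number = 0,
): BudgetCheck {
  const available = contextLimit - reservedForOutput;
  const remaining = available - promptTokens;
  return { fits: remaining >= 0, remaining };
}

// Hypothetical numbers: a 7,500-token prompt, an 8,192-token limit,
// and 1,000 tokens held back for the reply.
const result = checkTokenBudget(7500, 8192, 1000);
console.log(result); // { fits: false, remaining: -308 }
```

A negative `remaining` value shows how many tokens would need to be trimmed from the prompt before it fits.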

Compared with similar tools, the All in one LLM Token Counter stands out for its simplicity and efficiency. It offers a straightforward solution to a common problem faced by users of language models.

Overall, the All in one LLM Token Counter gives anyone working with LLMs a reliable and efficient way to manage token limits.