Ava PLS: Empowering Local Language Model Execution

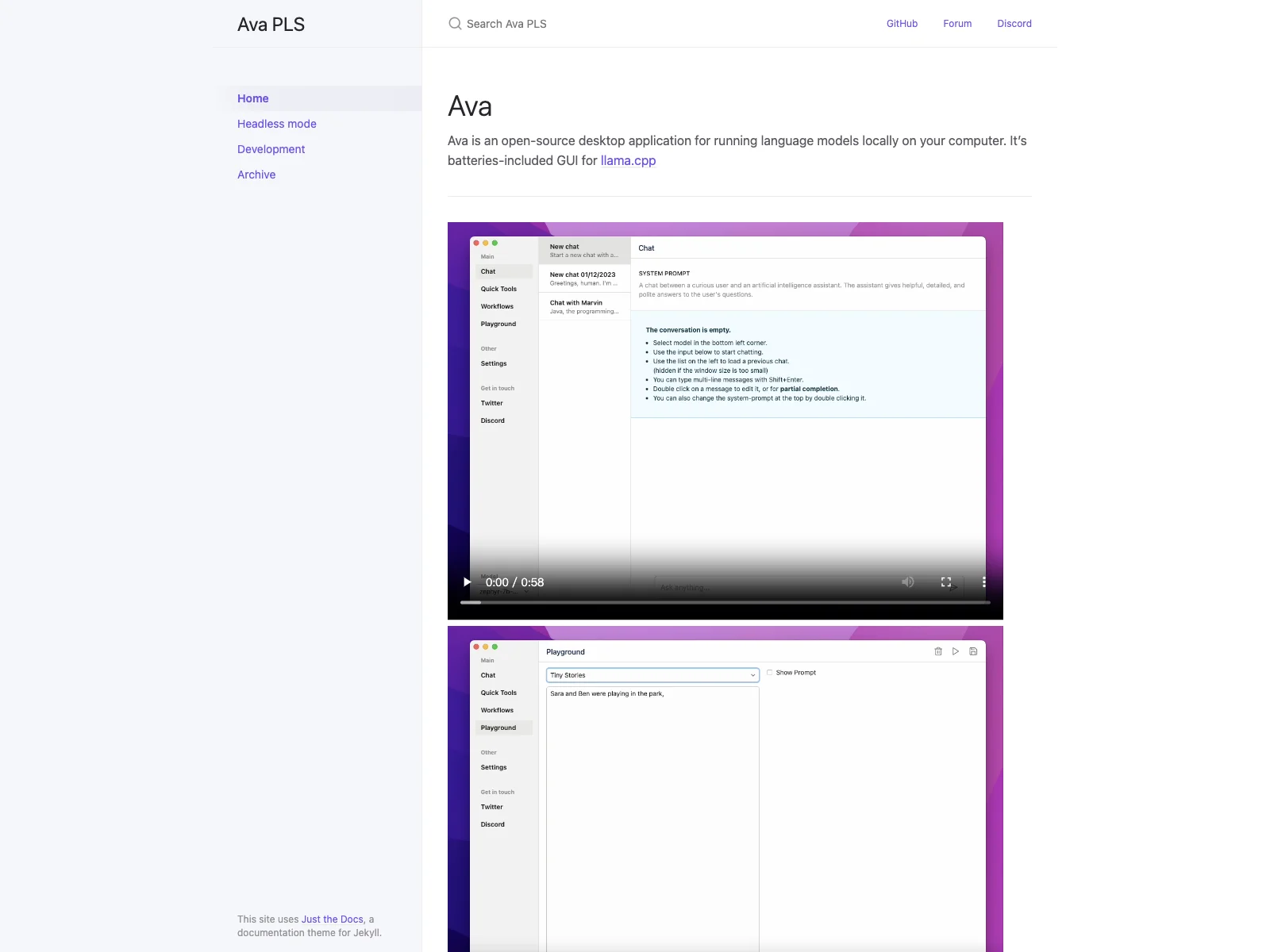

Ava PLS is an open-source desktop application for running language models locally on your computer. It is a batteries-included GUI for llama.cpp.

Overview

Ava PLS brings language models to your desktop. Instead of relying on cloud-based services, you run the models on your own machine. This is especially useful if you have concerns about data privacy or want more control over the execution environment.

Core Features

A key feature is its integration with llama.cpp. The GUI simplifies interacting with the language model, making it accessible to less technical users. The application is built on Zig, C++ (llama.cpp), SQLite, Preact, Preact Signals, and Twind.

You can download the latest artifacts from GitHub Actions or build the application yourself with "zig build run", or with "zig build run -Dheadless=true" to enable the headless option.
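
As a minimal sketch, the two build commands quoted above could be wrapped in a small Python helper. The function names `ava_build_command` and `run_ava` and the `repo_dir` parameter are hypothetical and not part of Ava PLS; only the `zig build run` invocation and the `-Dheadless=true` flag come from the text. The sketch assumes Zig is installed and that the command is run from a local checkout of the source.

```python
import shutil
import subprocess


def ava_build_command(headless: bool = False) -> list[str]:
    """Return the zig invocation quoted in the article (hypothetical helper)."""
    cmd = ["zig", "build", "run"]
    if headless:
        # Flag taken verbatim from the article; its exact effect is not described there.
        cmd.append("-Dheadless=true")
    return cmd


def run_ava(repo_dir: str, headless: bool = False) -> int:
    """Build and run from a local checkout; returns the process exit code."""
    if shutil.which("zig") is None:
        raise RuntimeError("zig was not found on PATH")
    # cwd is assumed to be the root of the source checkout.
    return subprocess.run(ava_build_command(headless), cwd=repo_dir).returncode
```

Calling `run_ava("path/to/checkout", headless=True)` would then issue the second command from the given directory. Keeping the command construction in its own function makes it easy to inspect the exact arguments before anything is executed.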

Basic Usage

Getting started with Ava PLS is straightforward. After downloading a pre-built version or building it yourself, launch the application and start working with the language model it hosts. The GUI guides you through the available options and settings.

Compared with cloud-based language model services, Ava PLS offers a more local and private experience. Cloud services have advantages in scalability and ease of access, while Ava PLS caters to those who prioritize running the language model on their own hardware.

In conclusion, Ava PLS is a good option for anyone who wants to run language models in a local, controlled environment through a user-friendly interface.