GET3D: Revolutionizing 3D Shape Generation

GET3D is a generative model for 3D content creation. It learns from 2D images and produces high-quality textured 3D shapes.

Overview

GET3D addresses the growing need for content creation tools in industries that model massive 3D virtual worlds. It directly generates explicit textured 3D meshes with complex topology, rich geometric detail, and high-fidelity textures. Many prior 3D generative models lack geometric detail, are restricted in the mesh topology they can produce, or do not properly support textures; GET3D is designed to overcome these limitations.

Core Features

One of the key features of GET3D is its ability to generate diverse shapes with arbitrary topology. It can produce a wide range of 3D objects, including cars, chairs, animals, motorbikes, human characters, and buildings. The model also achieves good disentanglement between geometry and texture: it uses separate latent codes for the two, so changing one code while holding the other fixed varies only the corresponding aspect of the shape. For example, shapes generated from the same geometry latent code keep their geometry but change appearance when the texture latent code is changed, and vice versa.
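The geometry/texture comparison described above can be set up as a grid of latent-code pairs. The following is a minimal sketch using NumPy only; the function name `latent_grid`, the default code dimension of 512, and the Gaussian sampling are assumptions made for illustration, and no actual GET3D generator is called.

```python
import numpy as np

def latent_grid(n_geo, n_tex, dim=512, seed=0):
    """Build an (n_geo x n_tex) grid of (geometry, texture) latent pairs.

    Row i shares one geometry code; column j shares one texture code.
    `dim` and the standard-normal sampling are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    z_geo = rng.standard_normal((n_geo, dim))
    z_tex = rng.standard_normal((n_tex, dim))
    # Broadcast so that geo[i, j] == z_geo[i] and tex[i, j] == z_tex[j].
    geo = np.broadcast_to(z_geo[:, None, :], (n_geo, n_tex, dim))
    tex = np.broadcast_to(z_tex[None, :, :], (n_geo, n_tex, dim))
    return geo, tex

geo, tex = latent_grid(n_geo=3, n_tex=4, dim=8)
print(geo.shape, tex.shape)
```

Feeding each pair `(geo[i, j], tex[i, j])` to a trained generator would then give a grid in which every row keeps its geometry and every column keeps its texture.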

Another notable feature is latent code interpolation. By applying a random walk in the latent space, GET3D generates smooth transitions between different shapes in every category. It can also perturb a latent code locally by adding a small amount of noise, producing similar-looking shapes with slight local differences.
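Both operations act on latent codes only, so they can be sketched without the generator. The example below is an illustration under stated assumptions: the random walk is approximated by linear interpolation between randomly sampled waypoints, the noise is Gaussian, and the function names are hypothetical rather than part of any GET3D code.

```python
import numpy as np

def interpolate(z_start, z_end, steps):
    """Linearly interpolate between two latent codes, endpoints included."""
    t = np.linspace(0.0, 1.0, steps)[:, None]   # shape (steps, 1)
    return (1.0 - t) * z_start + t * z_end      # shape (steps, dim)

def random_walk(dim, n_waypoints, steps_per_leg, rng):
    """Visit random waypoints, interpolating between consecutive ones."""
    waypoints = rng.standard_normal((n_waypoints, dim))
    # Drop the last point of each leg so shared waypoints are not repeated.
    legs = [interpolate(a, b, steps_per_leg)[:-1]
            for a, b in zip(waypoints[:-1], waypoints[1:])]
    return np.concatenate(legs + [waypoints[-1:]], axis=0)

def perturb(z, scale, rng):
    """Add small Gaussian noise to obtain a nearby latent code."""
    return z + scale * rng.standard_normal(z.shape)

rng = np.random.default_rng(0)
walk = random_walk(dim=8, n_waypoints=3, steps_per_leg=5, rng=rng)
nearby = perturb(walk[0], scale=0.01, rng=rng)
```

Decoding each row of `walk` with a trained generator would give the frames of a smooth transition, while `nearby` would decode to a shape close to the first frame.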

GET3D also supports text-guided shape generation. Following the approach of StyleGAN-NADA, when a user provides a text prompt, the 3D generator is fine-tuned with a directional CLIP loss computed between the rendered 2D images and the provided text. This makes it possible to generate a large number of meaningful shapes from user text prompts.
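The directional CLIP loss from StyleGAN-NADA encourages the change in image embedding (from the frozen generator's render to the fine-tuned generator's render) to point in the same direction as the change in text embedding (from a source description to the target prompt). The sketch below assumes the CLIP embeddings have already been computed and are passed in as arrays; the function name and argument names are hypothetical, and no CLIP model is loaded here.

```python
import numpy as np

def directional_clip_loss(img_src, img_tgt, txt_src, txt_tgt, eps=1e-8):
    """Mean of 1 - cos(delta_image, delta_text) over a batch.

    img_src: (B, D) embeddings of renders from the frozen generator.
    img_tgt: (B, D) embeddings of renders from the fine-tuned generator.
    txt_src, txt_tgt: (D,) embeddings of the source and target text.
    All inputs are assumed to be precomputed CLIP embeddings.
    """
    d_img = img_tgt - img_src
    d_txt = txt_tgt - txt_src
    cos = np.sum(d_img * d_txt, axis=-1) / (
        np.linalg.norm(d_img, axis=-1) * np.linalg.norm(d_txt, axis=-1) + eps
    )
    return float(np.mean(1.0 - cos))
```

The loss approaches 0 when the image embeddings move exactly along the text direction and approaches 2 when they move in the opposite direction. In an actual fine-tuning loop the same expression would be written in an autodiff framework so that gradients reach the generator.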

Basic Usage

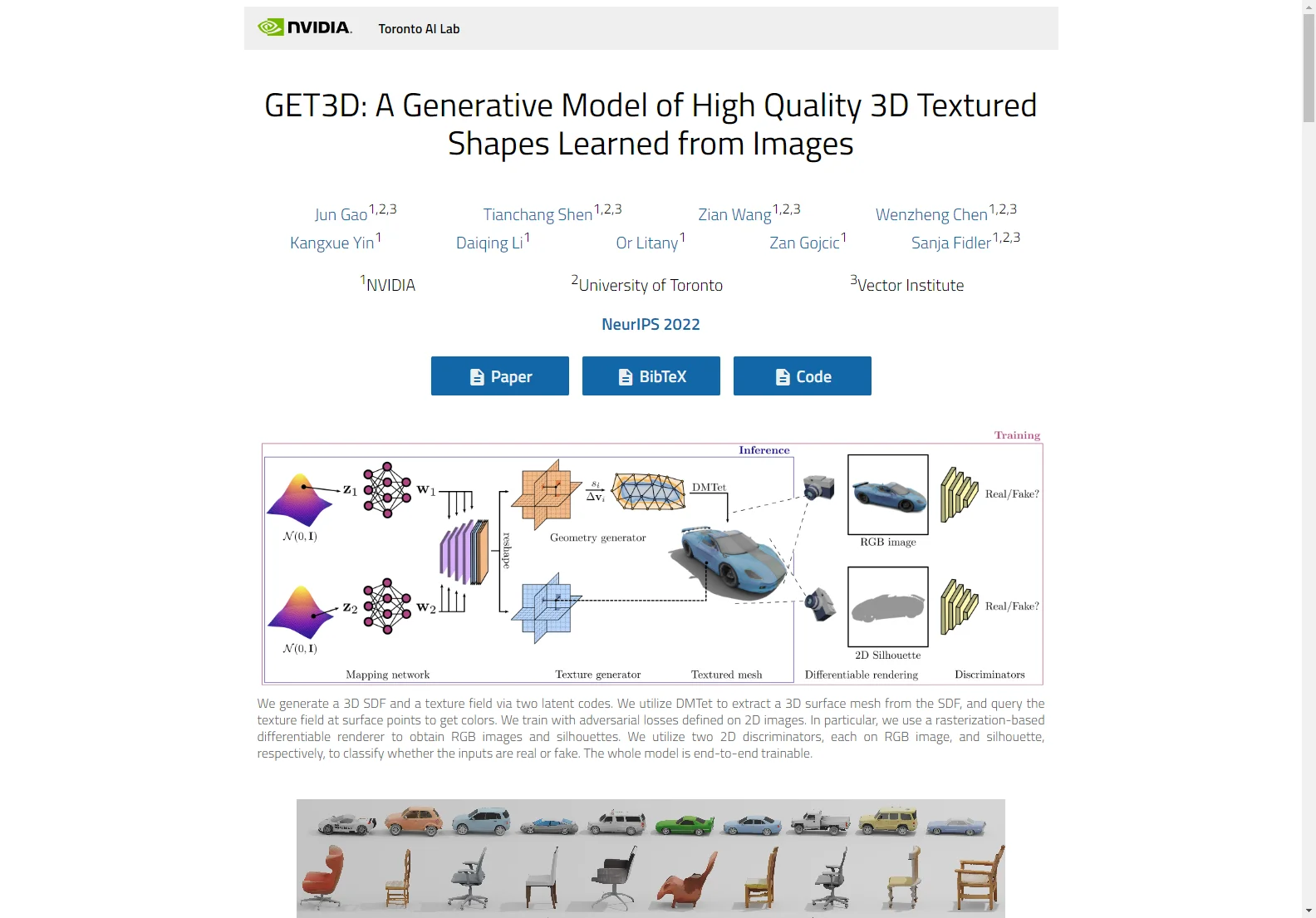

GET3D is trained on a collection of 2D images, with adversarial losses defined on those images. A rasterization-based differentiable renderer produces RGB images and silhouettes from the generated meshes. Two 2D discriminators, one for RGB images and one for silhouettes, classify their inputs as real or fake. The whole model is end-to-end trainable, which makes it relatively straightforward to work with once the initial setup and training process are understood.
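The two-discriminator setup can be sketched at the level of loss arithmetic. The example below assumes the non-saturating logistic GAN objective commonly used in StyleGAN-style training; the text above only states that adversarial losses are used, so the exact form, the equal weighting of the two terms, and all function names are assumptions. Discriminator outputs are represented as plain arrays of logits.

```python
import numpy as np

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return np.logaddexp(0.0, x)

def discriminator_loss(real_logits, fake_logits):
    """Push logits up on real inputs and down on rendered (fake) inputs."""
    return float(np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits)))

def generator_loss(fake_logits):
    """Non-saturating loss: the generator wants fakes scored as real."""
    return float(np.mean(softplus(-fake_logits)))

def total_generator_loss(fake_rgb_logits, fake_mask_logits):
    """Sum the generator terms from the RGB and silhouette discriminators."""
    return generator_loss(fake_rgb_logits) + generator_loss(fake_mask_logits)

# Stand-in logits for one batch of rendered RGB images and silhouettes.
rng = np.random.default_rng(0)
g_loss = total_generator_loss(rng.standard_normal(4), rng.standard_normal(4))
```

Because the renderer is differentiable, gradients of the generator loss can flow from both discriminators back through the rendered images and silhouettes to the 3D generator, which is what makes end-to-end training possible.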

Compared with existing 3D generative models, GET3D offers a more complete solution: it generates high-quality textured 3D meshes that can be used directly in downstream applications such as 3D rendering engines. Overall, GET3D extends what AI-driven 3D content creation can do.