Reflection 70B: The Future of AI Language Models

Reflection 70B is a revolutionary open-source LLM based on Llama 70B. It stands out with its state-of-the-art self-correction feature and pioneering training techniques. This enables it to deliver more accurate and reliable results compared to other LLMs.

The 'Reflection-Tuning' methodology employed by Reflection 70B allows for self-correction during generation. It significantly enhances the model's accuracy and reliability, setting a new standard in AI performance. The model's ability to identify and rectify its own reasoning errors in real-time is a standout feature. Using special tokens, it facilitates structured interaction and correction during the reasoning process.

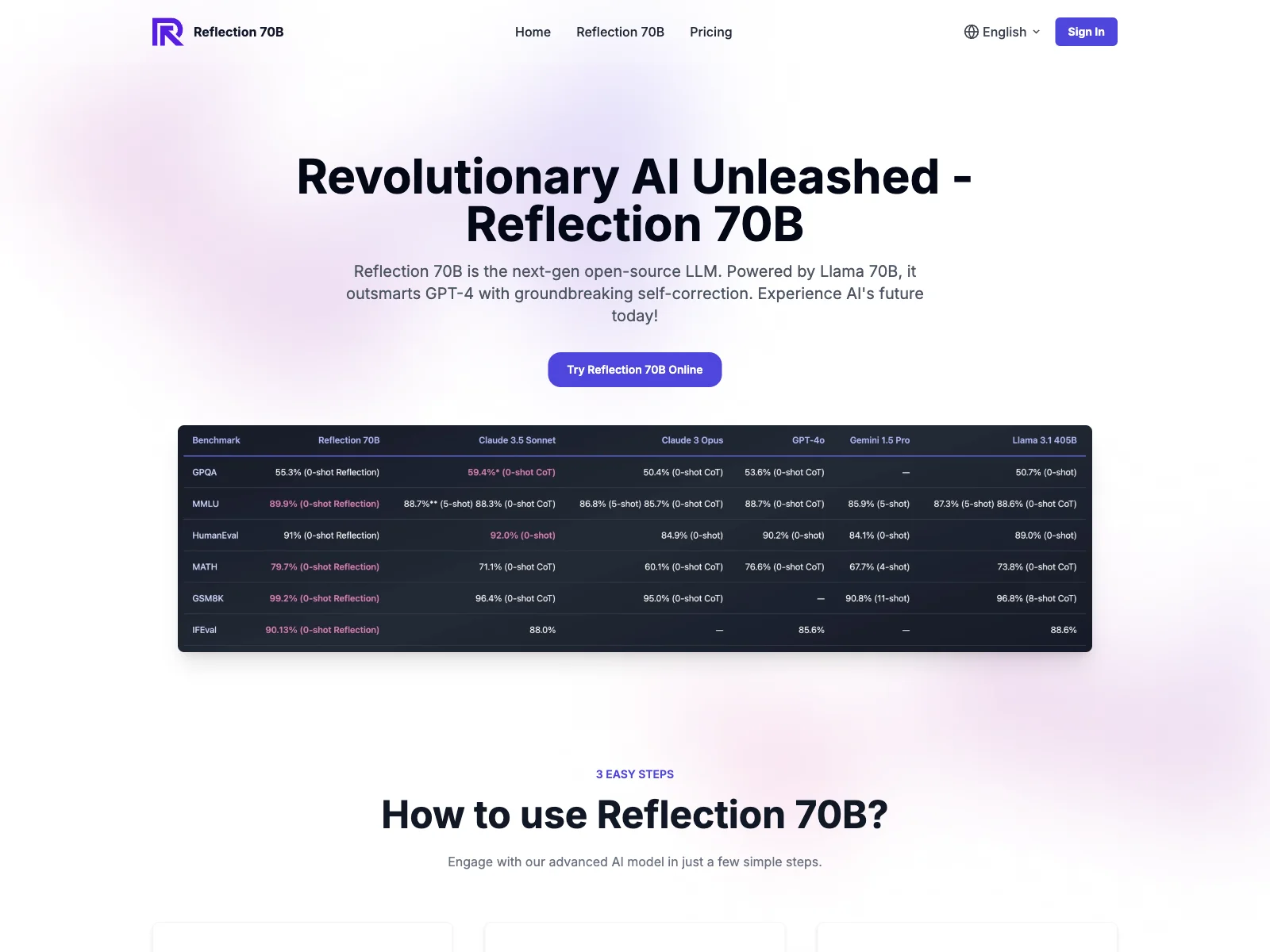

Built upon Meta's Llama 3.1 70B Instruct, Reflection 70B maintains compatibility with existing tools and pipelines, making it easy to integrate into various workflows. It has demonstrated exceptional results across various benchmarks, surpassing GPT-4 and even the 405B version of Llama 3.1 in certain aspects. Its high-precision task mastery makes it invaluable for complex problem-solving scenarios, and its advanced coding capabilities make it a great asset for developers and programmers.

In summary, Reflection 70B is a game-changer in the field of AI language models, offering a wide range of capabilities and benefits to users.