DreamFusion: Revolutionizing Text-to-3D Synthesis

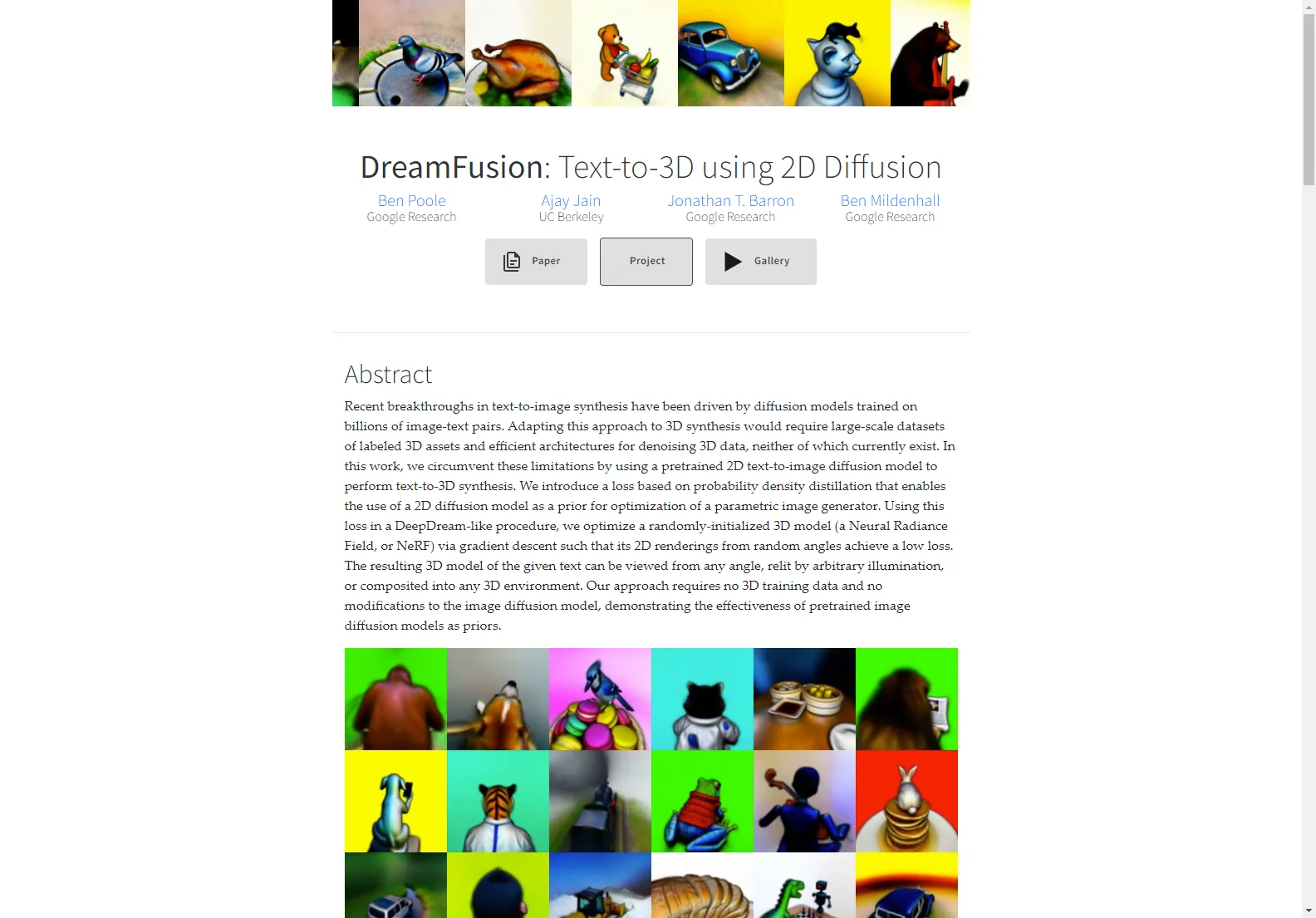

DreamFusion is a remarkable AI tool that utilizes a pretrained 2D text-to-image diffusion model to perform text-to-3D synthesis. This innovative approach circumvents the limitations of traditional 3D synthesis methods, which often require large-scale datasets of labeled 3D assets and efficient architectures for denoising 3D data. With DreamFusion, users can generate 3D objects with high-fidelity appearance, depth, and normals simply by providing a text caption.

The core of DreamFusion lies in its use of a loss based on probability density distillation. This enables the 2D diffusion model to act as a prior for the optimization of a parametric image generator. Through a DeepDream-like procedure, a randomly-initialized 3D model, such as a Neural Radiance Field (NeRF), is optimized via gradient descent. The result is a 3D model that can be viewed from any angle, relit by arbitrary illumination, or composited into any 3D environment.

One of the key advantages of DreamFusion is that it requires no 3D training data and no modifications to the image diffusion model. This demonstrates the effectiveness of pretrained image diffusion models as priors and opens up new possibilities for 3D content creation. Given a caption, DreamFusion generates relightable 3D objects with impressive visual quality and geometric detail.

DreamFusion also offers additional features such as the ability to export the generated NeRF models to meshes using the marching cubes algorithm. This makes it easy to integrate the 3D models into 3D renderers or modeling software, further enhancing their practical applications.

In summary, DreamFusion is a game-changer in the field of text-to-3D synthesis, offering a novel and efficient solution for creating high-quality 3D content from text descriptions.