LlamaChat: Revolutionizing Local AI Chatting

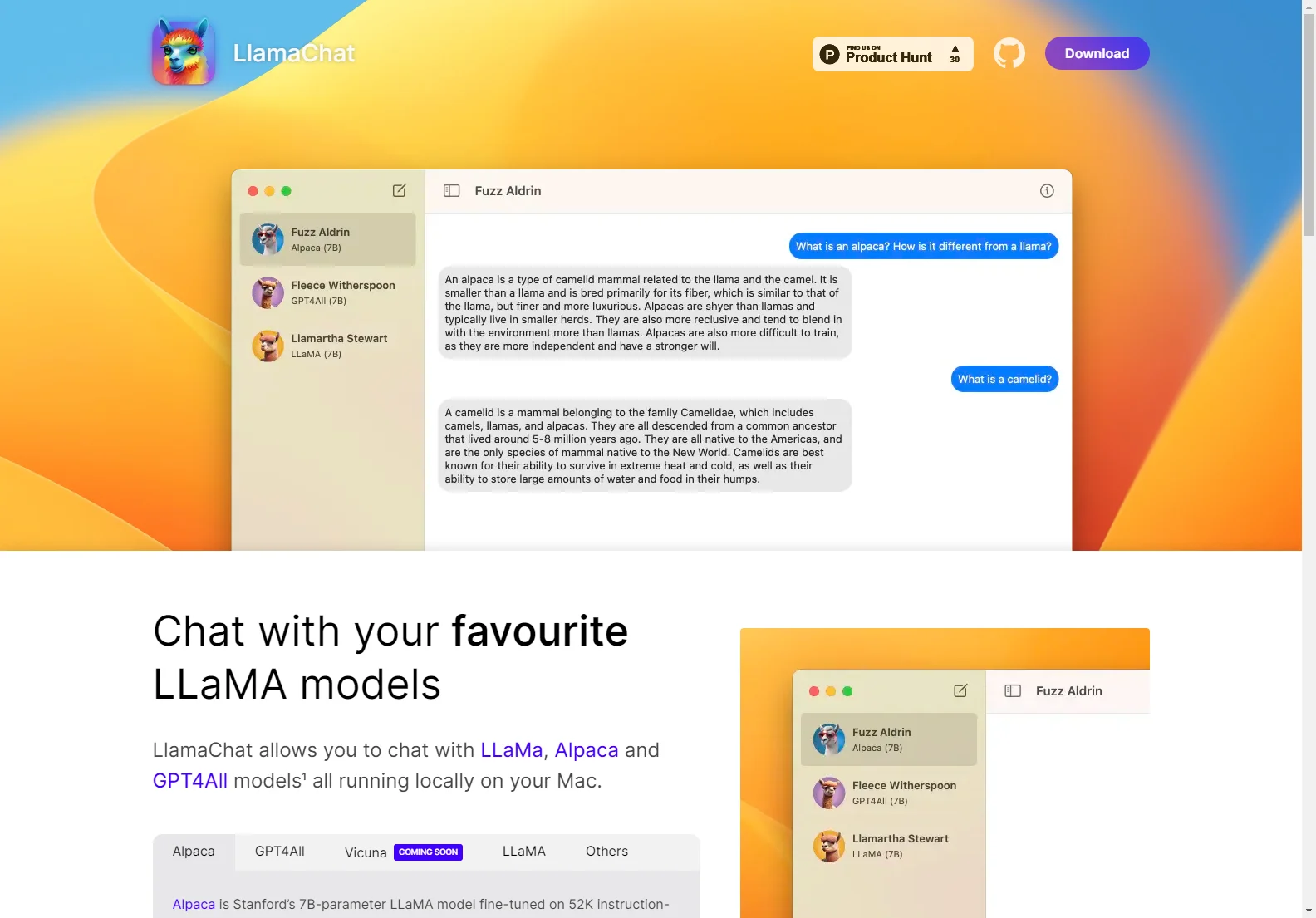

LlamaChat is a macOS app for chatting with LLaMA, Alpaca, and GPT4All models, all running locally on your Mac. Because the models run on your own machine, you can use advanced language models without needing a constant internet connection.

LlamaChat also supports Alpaca, Stanford's LLaMA model fine-tuned on 52K instruction-following demonstrations generated from OpenAI's text-davinci-003. This fine-tuning gives Alpaca a more chatbot-like conversational style than the original LLaMA model. LlamaChat does not perform this fine-tuning itself; it runs models that have already been trained.
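To make "instruction-following demonstration" more concrete, here is a minimal Python sketch of one such record and how it could be turned into a training prompt. It assumes the record layout (`instruction`, `input`, `output`) and the prompt wording published in the Stanford Alpaca repository; none of this code comes from LlamaChat, the helper `format_alpaca_prompt` is hypothetical, and the sample record is made up for illustration.

```python
# Sketch of an Alpaca-style instruction-following demonstration.
# Assumption: field names and template wording follow the Stanford Alpaca repo.
# format_alpaca_prompt is a hypothetical helper, not part of LlamaChat.

PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def format_alpaca_prompt(record: dict) -> str:
    """Build the prompt text; the record's 'output' is what the model learns to produce."""
    # Use the shorter template when the record has no (or an empty) input field.
    template = PROMPT_WITH_INPUT if record.get("input") else PROMPT_NO_INPUT
    # str.format ignores unused keyword arguments, so passing input="" is safe.
    return template.format(instruction=record["instruction"], input=record.get("input", ""))


# One made-up demonstration (not taken from the real 52K dataset).
example = {
    "instruction": "Translate the sentence into French.",
    "input": "Good morning.",
    "output": "Bonjour.",
}

prompt = format_alpaca_prompt(example)
# During fine-tuning, the model is trained to continue `prompt` with the output.
print(prompt + example["output"])
```

Training on many prompt/response pairs of this shape is what nudges the base model toward answering instructions conversationally rather than simply continuing text.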

LlamaChat also makes it easy to bring in models: it can import the raw published PyTorch model checkpoints directly, or load .ggml model files that you have already converted. Because it handles the conversion of PyTorch checkpoints itself, you don't need to run a separate conversion tool before trying a new model.
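The two import paths correspond to two different on-disk formats, which can usually be told apart from their first few bytes. The Python sketch below illustrates that idea under stated assumptions; it is not LlamaChat's actual detection logic. It assumes that PyTorch 1.6+ checkpoints are zip archives, that older PyTorch checkpoints start with a pickle protocol-2 header, and that legacy ggml files start with one of llama.cpp's little-endian `uint32` magic numbers (`ggml`, `ggmf`, `ggjt`). The names `classify_header` and `detect_model_format` are hypothetical.

```python
# Sketch: guess a model file's format from its first four bytes.
# Assumptions (not taken from LlamaChat's source):
#   - PyTorch >= 1.6 checkpoints are zip archives (local-file header "PK\x03\x04").
#   - Older PyTorch checkpoints begin with a pickle protocol-2 header (0x80 0x02).
#   - Legacy llama.cpp ggml files begin with a little-endian uint32 magic.
# classify_header and detect_model_format are hypothetical helpers.
import struct
from pathlib import Path

GGML_MAGICS = {
    struct.pack("<I", 0x67676D6C): "ggml",  # unversioned ggml
    struct.pack("<I", 0x67676D66): "ggmf",  # versioned ggml
    struct.pack("<I", 0x67676A74): "ggjt",  # mmap-friendly ggml
}


def classify_header(head: bytes) -> str:
    """Map the first bytes of a file to a coarse format label."""
    if head[:4] in GGML_MAGICS:
        return GGML_MAGICS[head[:4]]
    if head[:4] == b"PK\x03\x04":
        return "pytorch-zip"
    if head[:2] == b"\x80\x02":
        return "pytorch-legacy"
    return "unknown"


def detect_model_format(path: Path) -> str:
    """Read only the header so large checkpoints are never loaded into memory."""
    with open(path, "rb") as f:
        return classify_header(f.read(4))


if __name__ == "__main__":
    import sys

    for name in sys.argv[1:]:
        print(name, detect_model_format(Path(name)))
```

Checking magic bytes rather than file extensions is a common design choice here, since checkpoint files are often renamed (for example `.pth` versus `.bin`) while their internal layout stays the same.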

LlamaChat is also fully open source and built on open-source libraries, including llama.cpp and llama.swift. The tool is freely available, and developers can contribute fixes and improvements to it.

Note that LlamaChat does not ship with any model files. You are responsible for obtaining the model files you want to use and for using them in accordance with the terms and conditions set by their providers.

In conclusion, LlamaChat combines local inference, support for fine-tuned models such as Alpaca, built-in model conversion, and an open-source codebase. Together, these make it a useful option for Mac users who want to experiment with AI chat models on their own machine.