Introduction to PromptPoint

PromptPoint is a prompt engineering tool for designing, testing, and deploying prompts for large language models (LLMs). It aims to make each of those steps quick and straightforward.

Overview

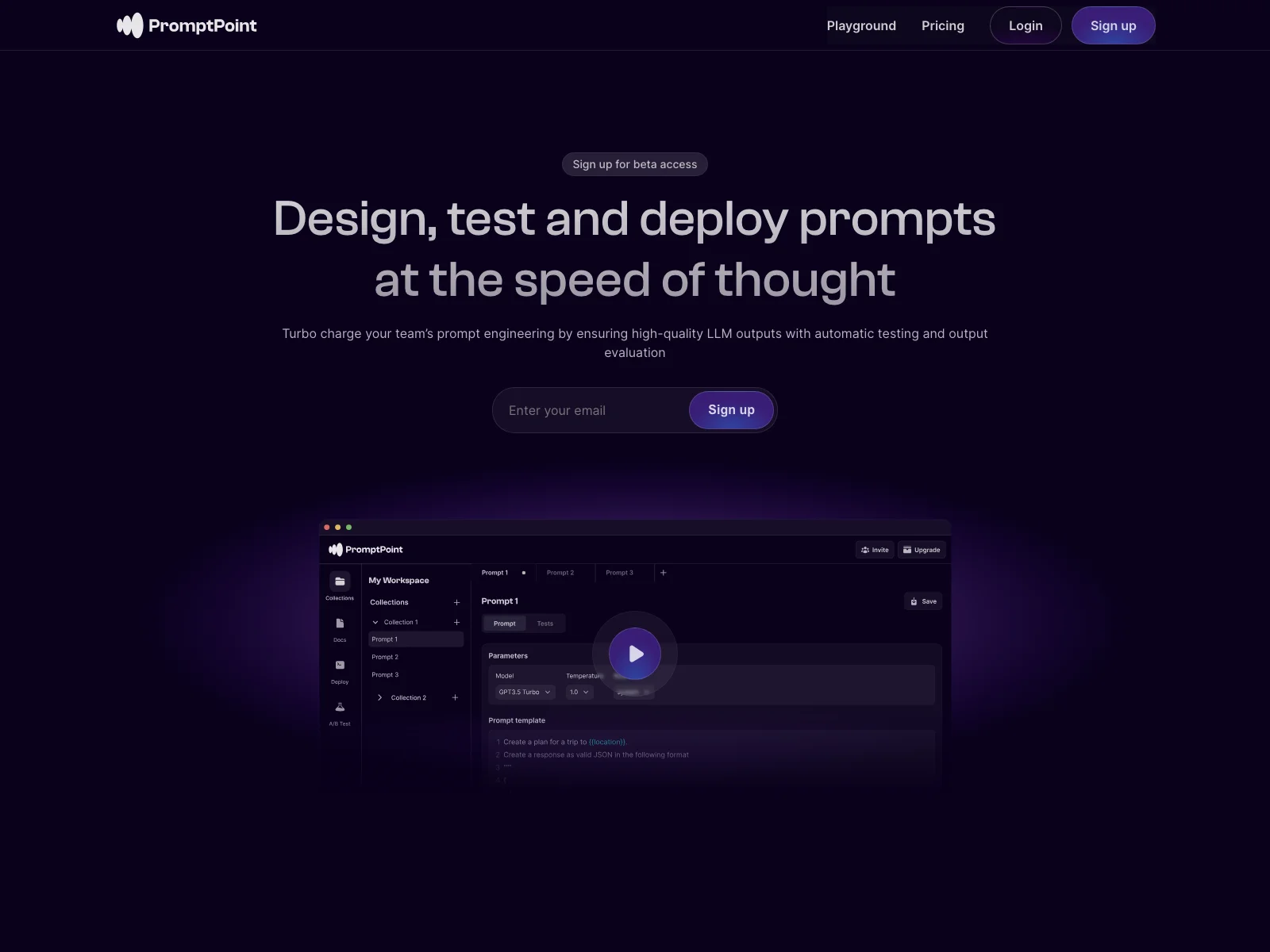

The platform lets you template, save, and organize your prompt configurations in one place. Whether you're a seasoned professional or just starting out, you can quickly get to grips with creating effective prompts.
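PromptPoint handles templating through its own interface, so no code is required. For readers who think in code, the general idea of a saved, reusable prompt template can be sketched as follows. This is a minimal illustration using Python's standard library; `PROMPT_LIBRARY` and `render_prompt` are hypothetical names and do not reflect PromptPoint's storage format or API.

```python
from string import Template

# Hypothetical in-memory "library" of saved prompt templates.
# This is NOT PromptPoint's format; it only illustrates the concept.
PROMPT_LIBRARY = {
    "summarize": Template(
        "Summarize the following $doc_type in $n_sentences sentences:\n$text"
    ),
}


def render_prompt(name: str, **variables) -> str:
    """Fill a saved template with variables.

    Raises KeyError if the template name is unknown or a variable is missing.
    """
    return PROMPT_LIBRARY[name].substitute(**variables)


if __name__ == "__main__":
    print(render_prompt("summarize", doc_type="email", n_sentences=2,
                        text="Hi team, the launch moved to Friday."))
```

Keeping the template separate from its variables is what makes a prompt reusable: the same saved configuration can be filled with different inputs each time.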

Core Features

One of the standout features is making non-deterministic LLM behavior more predictable. PromptPoint runs automatic tests against your prompts and evaluates the outputs, so you can check output quality as you iterate. Tests return comprehensive results in seconds, which saves time and speeds up your team's prompt engineering work.
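To make the idea of automatic testing and output evaluation concrete, here is a minimal sketch of a prompt test harness. It is an assumption-laden illustration, not PromptPoint's implementation: `fake_model` is a stand-in for a real LLM call so the example runs offline, and `run_tests` and `pass_rate` are hypothetical helper names.

```python
from typing import Callable, Dict, List, Tuple

Check = Callable[[str], bool]


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call (hypothetical), so the sketch runs offline."""
    if "capital of France" in prompt:
        return "The capital of France is Paris."
    return "I am not sure."


def run_tests(model: Callable[[str], str],
              cases: List[Tuple[str, Check]]) -> List[Dict]:
    """Run each prompt through the model and evaluate the output with its check."""
    results = []
    for prompt, check in cases:
        output = model(prompt)
        results.append({"prompt": prompt, "output": output,
                        "passed": check(output)})
    return results


def pass_rate(results: List[Dict]) -> float:
    """Fraction of test cases whose output passed its check."""
    return sum(r["passed"] for r in results) / len(results)


# Each case pairs a prompt with a simple evaluation rule for the output.
CASES = [
    ("What is the capital of France?", lambda out: "Paris" in out),
    ("What is the capital of Peru?", lambda out: "Lima" in out),
]

if __name__ == "__main__":
    results = run_tests(fake_model, CASES)
    for r in results:
        print("PASS" if r["passed"] else "FAIL", "-", r["prompt"])
    print("pass rate:", pass_rate(results))
```

With a real model, the same case can be run several times to see how often the check holds, which is one way to put a number on non-deterministic behavior.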

Another strength is its ability to connect with hundreds of large language models. In a many-model world, this keeps your options open: you can choose whichever model best fits each specific need.
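The value of multi-model access comes from putting different models behind one common interface, so the same prompt can be sent to any of them. The sketch below illustrates that pattern only. The two "models" are trivial local functions, and `MODELS` and `complete` are hypothetical names, not part of PromptPoint or any provider's API.

```python
from typing import Callable, Dict

ModelFn = Callable[[str], str]


def echo_model(prompt: str) -> str:
    """Toy stand-in for one model provider (hypothetical)."""
    return f"[echo] {prompt}"


def upper_model(prompt: str) -> str:
    """Toy stand-in for a second model provider (hypothetical)."""
    return prompt.upper()


# Registry mapping a model name to a callable with a shared signature.
MODELS: Dict[str, ModelFn] = {
    "echo": echo_model,
    "upper": upper_model,
}


def complete(model_name: str, prompt: str) -> str:
    """Send the prompt to the named model; reject unknown names clearly."""
    if model_name not in MODELS:
        raise ValueError(f"unknown model: {model_name!r}")
    return MODELS[model_name](prompt)


if __name__ == "__main__":
    for name in MODELS:
        print(name, "->", complete(name, "hello"))
```

Because every entry shares one signature, swapping or comparing models is a change to a single argument rather than to the calling code.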

Basic Usage

Getting started with PromptPoint is straightforward. You can access the prompt playground and try it out for free, without even needing to enter a credit card or sign up. This gives you a chance to explore its capabilities before committing.

Once you're ready to go deeper, you can structure your prompt configurations precisely and deploy them instantly for use in your own software applications. Because the platform is natively no-code, anyone on your team can write and test prompt configurations, regardless of technical expertise.

In conclusion, PromptPoint combines prompt organization, automatic testing, access to many models, and no-code deployment, helping teams get more out of prompt engineering and achieve better results with their LLM-powered applications.