Introduction to StableBeluga2

StableBeluga2 is a large language model developed by Stability AI. It is based on the Llama2 70B model and has been fine-tuned on an Orca-style dataset.

Core Features

One of the key features of StableBeluga2 is its ability to follow instructions. It generates text in English, which makes it useful for applications such as writing poems, stories, or other kinds of text. The model was trained with supervised fine-tuning in mixed precision (BF16), using the AdamW optimizer.

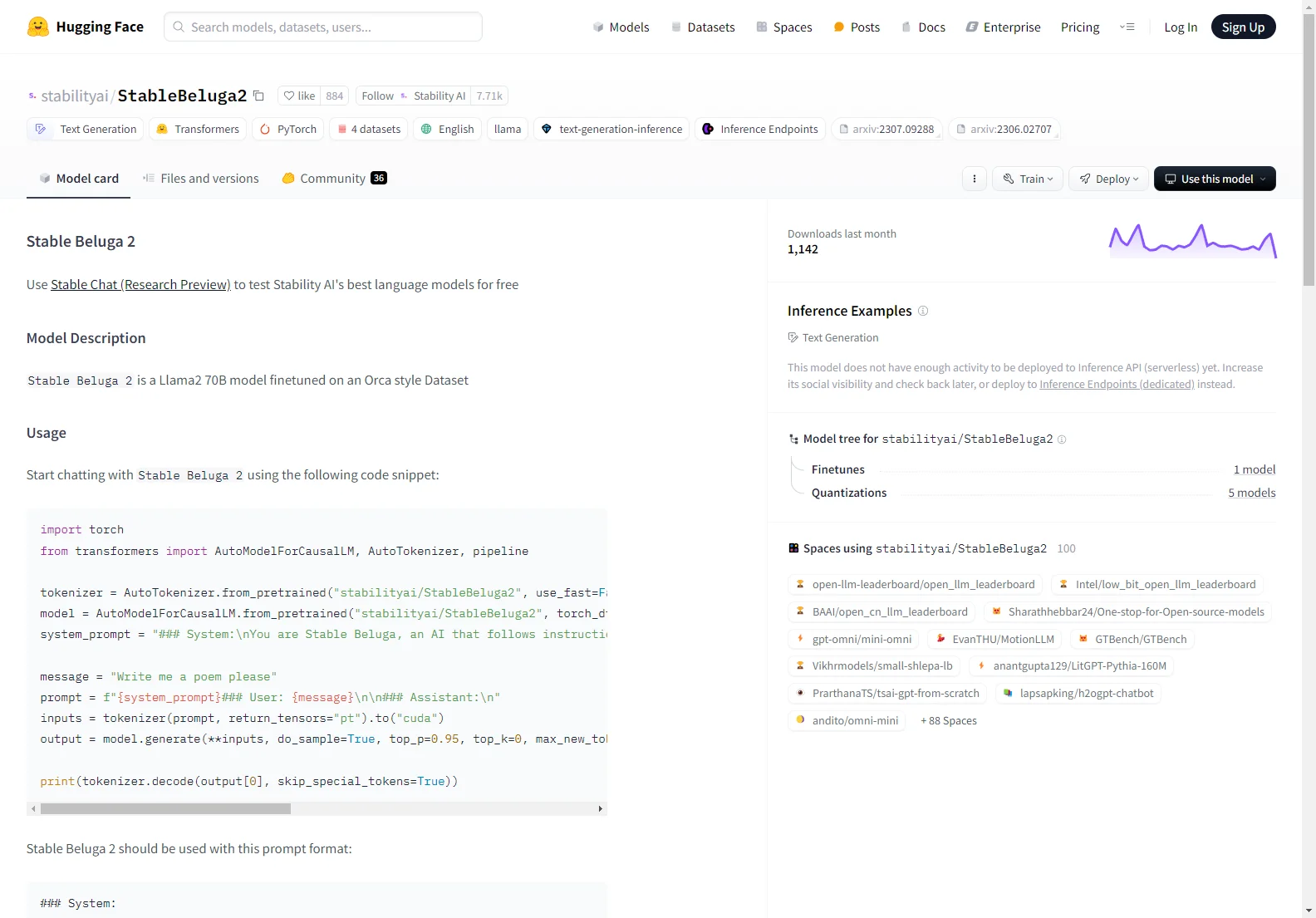

Basic Usage

To start using StableBeluga2, you only need a short script. First, import torch and, from transformers, AutoModelForCausalLM, AutoTokenizer, and pipeline. Then load the tokenizer and model from the appropriate pretrained paths. After that, define a system prompt and a user message, format them into a single prompt, and pass that prompt to the model to generate the output. For example, if you want a poem, set the user message to 'Write me a poem please' and let the model generate the rest.
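The steps above can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not a definitive implementation: the model identifier `stabilityai/StableBeluga2`, the `### System:` / `### User:` / `### Assistant:` prompt layout, the default system prompt, and the sampling settings are not given in this article and should be checked against the model card. The helper names `build_prompt` and `generate_reply` are hypothetical.

```python
# Assumed Hugging Face model identifier; verify it against the model card.
MODEL_ID = "stabilityai/StableBeluga2"

# Illustrative system prompt (an assumption, not taken from this article).
DEFAULT_SYSTEM_PROMPT = (
    "You are Stable Beluga, an AI that follows instructions extremely well. "
    "Help as much as you can."
)


def build_prompt(user_message: str, system_prompt: str = DEFAULT_SYSTEM_PROMPT) -> str:
    """Combine a system prompt and a user message into one prompt string.

    The section markers below are an assumed format; adjust them if the
    model card specifies a different layout.
    """
    return (
        f"### System:\n{system_prompt}\n\n"
        f"### User:\n{user_message}\n\n"
        "### Assistant:\n"
    )


def generate_reply(user_message: str, model_id: str = MODEL_ID, max_new_tokens: int = 256) -> str:
    """Load the tokenizer and model, then generate a reply to user_message.

    Heavy imports live inside the function so the prompt helper above can be
    used without torch or transformers installed. A 70B model needs
    substantial GPU memory, and device_map="auto" requires the accelerate
    package.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # half precision to reduce memory use
        low_cpu_mem_usage=True,
        device_map="auto",
    )

    # Tokenize the formatted prompt and move the tensors to the model's device.
    inputs = tokenizer(build_prompt(user_message), return_tensors="pt").to(model.device)

    # Sampling settings are illustrative; tune them for your application.
    output = model.generate(
        **inputs,
        do_sample=True,
        top_p=0.95,
        max_new_tokens=max_new_tokens,
    )
    # The decoded string contains the prompt followed by the generated text.
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

With the model weights available, calling `generate_reply("Write me a poem please")` would return the prompt followed by the model's poem. Keeping prompt formatting in its own function makes it easy to inspect or change the layout without loading the model.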

Compared with other AI language models, StableBeluga2 is distinguished mainly by its fine-tuning on an Orca-style dataset and its instruction-following capabilities, whereas other models may focus on specific types of text generation or use different training datasets. Like all language models, however, it has limitations. Because it is a new technology, its outputs cannot be predicted with certainty in every scenario, and it may produce inaccurate or biased responses in some cases. Developers should therefore perform safety testing and tuning tailored to their specific applications.

In conclusion, StableBeluga2 is a good option for users looking for an AI-powered text generation solution. Used with a clear understanding of its capabilities and limitations, it can be a valuable tool.